Will the Hoped-For Rewards from Improved Interoperability and Reduced Information Blocking Outweigh the Potential Impact on the Privacy and Security of Personal Health Information?

Jon Moore, MS, JD, HCISPP, Chief Risk Officer and Senior Vice President, Consulting Services, Clearwater

Originally published by AHLA’s Health Information and Technology Practice Group, September 2020.

On May 1, 2020, the Department of Health and Human Services (HHS) published in the Federal Register two new final rules (Final Rules) targeted at improving interoperability and patient access to health information: one from HHS’ Office of the National Coordinator for Health Information Technology (ONC) and the other from its Centers for Medicare & Medicaid Services (CMS).

In its March 9, 2020, press release, HHS stated that “these final rules mark the most extensive healthcare data sharing policies the federal government has implemented, requiring both public and private entities to share health information between patients and other parties while keeping that information private and secure.” While few will argue the extensive nature and impact of the new Rules on data sharing, the claims of keeping that information private and secure have come under much debate. The concerns about privacy and security focus mainly on the new requirements for standards-based, publicly available web application programming interfaces (APIs) for certified health IT systems.

This article describes the Final Rules generally and, more specifically, the one-two punch of information blocking penalties and new API requirements that they introduce. To help the reader understand the implications of the new API requirements, APIs are explained, along with the evolution of APIs in health care technology. Finally, this article examines the risks arising from the implementation of APIs in the health care arena.

It is too early to understand if the industry will see the hoped-for benefits of improved care and reduced cost as a result of the Final Rules. It is also too early to know if those benefits will outweigh any potential negative impact on the privacy and security of electronic health information. The information presented here, however, provides the reader with sufficient background to make their own determination as the health care industry realizes the results of the Final Rules.

The new final rules on interoperability, information blocking, and application programming interfaces

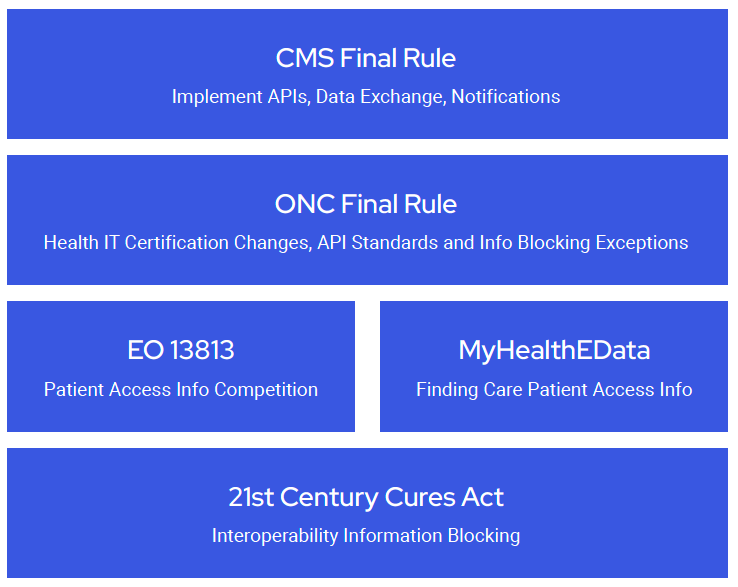

The two Final Rules implement portions of the 21st Century Cures Act (Cures Act), contribute to fulfilling Executive Order (E.O.) 13813, and support President Trump’s MyHealthEData initiative. It is impossible to understand the objectives of the new Final Rules without some background on this law and these executive actions. Each is briefly summarized below.

Passed in 2016, the Cures Act targeted streamlining the drug and medical device approval processes. In addition, Title IV of the Cures Act defined the term

“interoperability” for health information technology and established penalties for information blocking with fines of up to $1 million per violation. These provisions were designed to promote the use of electronic health records to improve care, empower patients, and improve their access to their electronic health information.

E.O. 13813, Promoting Healthcare Choice and Competition Across the United States, was signed by President Trump on October 12, 2017. The E.O. is intended to “re-inject competition” into healthcare markets and improve Americans’ access to quality of information, thereby allowing them to make better-informed health care decisions.

CMS Administrator Seema Verma announced the MyHealthEData initiative on March 6, 2018. This initiative “aims to empower patients by ensuring that they control their healthcare data and can decide how their data is going to be used, all while keeping that information safe and secure.” Ultimately, the initiative aims to facilitate every American’s ability to find the providers and services that best meet their unique health care needs and give that provider secure access to their health information.

The ONC Final Rule, titled the 21st Century Cures Act: Interoperability, Information Blocking, and the ONC Health IT Certification Program, implements the interoperability provisions of the Cures Act to facilitate the flow of information between providers, payers, and patients. ONC hopes to achieve this by changing

its health information technology certification program, regulating information blocking, and implementing standards for APIs for use in the exchange of health care information.

The CMS Final Rule is titled the Medicare and Medicaid Programs; Patient Protection and Affordable Care Act; Interoperability and Patient Access for Medicare Advantage Organization and Medicaid Managed Care Plans, State Medicaid Agencies, CHIP Agencies, and CHIP Managed Care Entities, Issuers of Qualified Health Plans on the Federally-facilitated Exchanges, and Health Care Providers. As the name implies, this Final Rule applies to Medicare Advantage (MA), Medicaid, CHIP, and Qualified Health Plan (QHP) issuers on Federally-facilitated Exchanges (FFEs). The CMS Final Rule requires payer-to-payer data exchange, following the ONC’s API standards in the implementation of two specific APIs, adopting conditions of participation (CoP) notice requirements, and publicly reporting providers that may be information blocking.

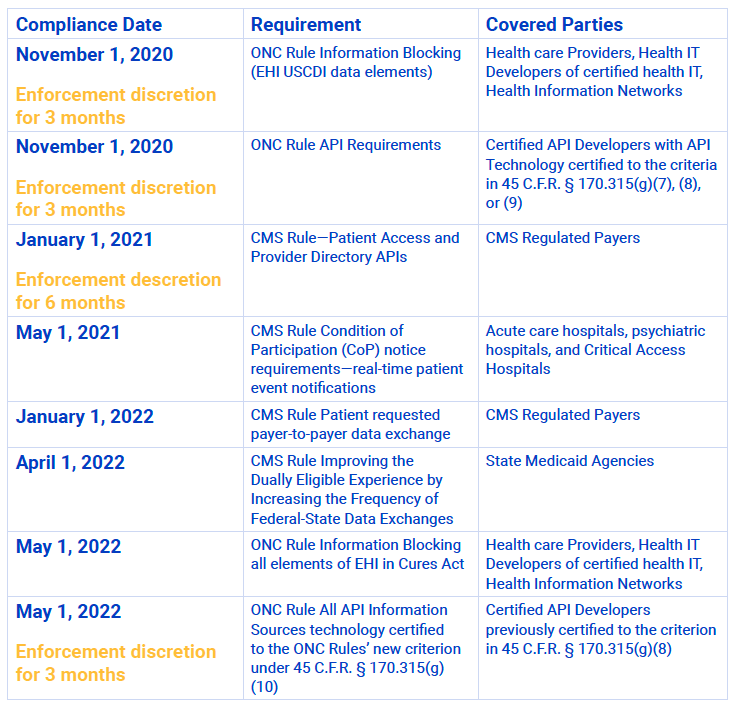

Compliance dates

There are several different compliance dates contained within the Final Rules. HHS has already extended or announced a period of enforcement discretion for several of these dates due to COVID-19. While organizations that fall within the scope of these Final Rules should watch for further changes, the current compliance dates are as follows:

Interoperability and information blocking

For several decades, it has been the belief of many people within the health care industry and federal government that the digitization of health care information is the key to improving patient care while simultaneously reducing the cost of that care. They envision a world where an individual’s health information flows freely between patient, provider, payer, researcher, and regulator. They hypothesize first that improved care will come from providers and patients having the information necessary to make appropriate and timely diagnosis and treatment decisions and second that cost savings will come from reduced friction in the flow of information, transparency or visibility into the cost of care, and the opening up of the health care market to increased competition.

This belief has driven much of the health care thinking and legislative activity since 1996’s Health Insurance Portability and Accountability Act (HIPAA). Beginning in 2011, it also resulted in the distribution of billions of dollars in federal incentives to qualified health care professionals and hospitals for the meaningful use of ONC-certified electronic health records (EHRs) under the Health Information Technology for Economic and Clinical Health Act (HITECH). Despite the legislative action and investment, the hoped-for benefits of improved care and reduced cost remain elusive.

In late 2013, a study was completed for the Agency for Healthcare Research and Quality (AHRQ) by JASON, an independent panel of experts. The results published in a paper titled, A Robust Health Data Infrastructure, indicated that:

- The current lack of interoperability among data resources for EHRs is a significant impediment to the exchange of health information and the development of a robust health data infrastructure. Interoperability issues can be resolved only by establishing a comprehensive, transparent, and overarching software architecture for health information.

- The goals of improved health care and lowered health care costs can begin to be realized if health-related data can be explored and exploited in the public interest, for both clinical practice and biomedical research. That will require implementing technical solutions that both protect patient privacy and enable data integration across patients.

The release of this study changed the focus of the proponents of digitization. Their attention moved from the broader effort of promoting the adoption of electronic health information technology to overcoming the more specific hurdles to improving interoperability. Their chosen method for doing so is imposing standards for the exchange of health information, requiring the implementation of APIs to facilitate the exchange, and punishing any resistance, referred to as information blocking.

Section 4003 of the Cures Act officially defines “interoperability” as health information technology that:

A. enables the secure exchange of electronic health information with, and use of electronic health information from, other health information technology without special effort on the part of the user;

B. allows for complete access, exchange, and use of all electronically accessible health information for authorized use under applicable State or Federal law; and

C. does not constitute information blocking, as defined in section 3022(a).

ONC has stated that “without special effort,” as used here, means a process that is standardized, transparent, and pro-competition.

Information blocking is defined in Section 4004 of the Cures Act as a practice that is likely to interfere with, prevent, or materially discourage access, exchange, or use of electronic health information (EHI). A health IT developer, exchange, or network engage in information blocking only if they know or should know that the practice is likely to interfere with, prevent, or materially discourage the access, exchange, or use of EHI. A health care provider engages in information blocking only if the provider knows that such practice is unreasonable and is likely to interfere with, prevent, or materially discourage access, exchange, or use of EHI.

To further refine this definition, the Cures Act requires the Secretary of HHS to define through rulemaking reasonable and necessary activities that do not represent information blocking. The Secretary adopted this definition of information blocking in the ONC Final Rule, setting out two categories of exceptions.

The first category of information blocking exceptions involves permissible reasons to deny requests to access, exchange, or use EHI. These exceptions are listed in the ONC Final Rule as:

- Preventing Harm Exception

- Privacy Exception

- Security Exception

- Infeasibility Exception

- Health IT Performance Exception

For each exception in this category, the ONC Final Rule describes the conditions under which an organization can deny a request without penalty.

The second category of information blocking exceptions involves the procedures followed in fulfilling requests to access, exchange, or use EHI. These exceptions include:

- Content and Manner Exception

- Fees Exception

- Licensing Exception

Unlike the first category of exceptions that focuses on circumstances under which a request may be denied, this second category focuses on the process through which a request will be honored. In other words, these exceptions target actions by an organization that might be viewed as discouraging or hindering a request as opposed to outright preventing or denying it.

As mentioned above, Section 4004 of the Cures Act defines a potential penalty of up to $1 million per violation for health IT developers and information networks determined by the Inspector General to have engaged in information blocking. Health care providers who are found to engage in information blocking, on the other hand, will be referred to CMS if they have made a fraudulent attestation under the Promoting Interoperability Program or to the Office for Civil Rights (OCR) if the activity results in a potential HIPAA violation.

What is an API and why are APIs important?

APIs are formal connection points provided by an application through which another application can get data or services from the first. The second application must ask for the data or service following prescribed methods and formats and must make its request at a specific location. The instructions provided by the developer of the first application describing how to ask and what to expect as a result of asking, where to connect, and the software that facilitates the connection collectively form the API.

A benefit of the API is that, with a defined connection point and instructions for accessing it, the application doing the asking doesn’t need to know how the recipient of the request will go about providing the data or service (the implementation details). All the connecting application needs to understand is where to connect, the format to follow in making its connection, and what it will get in return, including the nature of the data or service, as well as its format. Generally speaking, this dramatically simplifies the ability for one application to connect to another and reduces the cost associated with making connections. This cost savings is particularly substantive if there will be future additional applications wanting to join that will now be able to leverage the now-established API.

In addition to the potential cost savings, the API provider also benefits by maintaining control over and access to its system. By defining the API, the provider controls the information and services available through the API. The provider may also make changes to the code within the application itself without impacting the API and

the ability of other apps to connect. In this way, an application developer has more freedom and flexibility in its application’s growth and development.

As alluded to above, APIs consist of two primary elements. The first element is the technical specification, including instructions on how to use the API or how to ask for information or services from the application and what to expect as a result. The second element of an API is the software interface written to the specification. The interface is the software connection point through which the asking is done using language the system can interpret, as described in the technical specification.

Experts often talk of APIs as contracts. The technical specification is essentially an offer that says, if one follows the spec, they will get the described results. Sending a request to the API is an acceptance of this offer. The response represents the fulfillment of the contract. Whether all elements necessary to create an enforceable legal contract from an API exist depends on the specific circumstances. Still, the concept helps understand the relationship between an API provider and those accessing the API.

APIs are also sometimes categorized by who is allowed to use the API. Typical categories include:

- Private APIs—Only used within an organization to connect or share information between the organization’s internal systems or applications.

- Partner APIs—Promoted but only available for approved business partners. Only the approved business partners may connect to the API.

- Public APIs—Available to any third-party developer. Frequently, the technical specifications and address of the software interface are published to the public.

For purposes of our discussion, we will focus on public APIs, as the APIs required for third-party applications by the new interoperability rules are of this type. However, there are requirements for partner connections as well.

Developers sometimes categorize APIs as open or protected APIs. Open APIs are available for use by anyone through the Web, just like any typical web page. Protected APIs require authentication and authorization to access. Both of these types are relevant to this discussion as both types appear in the new required health care APIs.

Understanding a specific API requires a review of its reference literature such as:

- API Documentation—A human-readable reference guide on how to use the API. The documentation might include tutorials and examples to assist a developer in getting started.

- API Specification—Intended for humans to read but is more specific on how the API functions and the result one gets from its use. Typically, the developer will write the specification in an API specification language like OpenAPI or Restful API Modeling Language (RAML) that facilitates API definition through the use of a machine-readable format.

- API Definition—Similar to the API Specification except always in a machine-readable format.

Together these references are intended to facilitate the understanding and use of the API by programmers. Some or all of this documentation will make up the Technical Specification of the API.

A brief history of APIs

APIs have been around for a long time. It’s not clear when the first private or partner API launched. It probably goes back to the early days of software-based systems. Public APIs are a bit more recent and developed as a result of the Internet becoming more accessible to the general public. Salesforce is given credit for launching the first web API in February of 2000, and eBay followed with the first public API, also web-based, in November of 2000.

APIs developed and made available by web-based companies like eBay, Salesforce, Google, and social media companies like Facebook and Twitter have propelled APIs into mainstream use. The number of public APIs has been growing exponentially since 2000 and now number in the tens of thousands. The rise of smartphones and their applications, many of which take advantage of public APIs, continue to fuel development and users’ expectations that they should have easy access to information everywhere.

The APIs made available by the Web and social media companies referenced above are called web APIs. They are made available at specific locations on the Internet known as URIs, or uniform resource identifiers, and accessed using the hypertext transfer protocol (HTTP) and hypertext transfer protocol secure (HTTPS).

Web API protocols and standards

As the Web evolved from static HTML pages to what it is today, so has the technology, protocols, and standards required to support it. The Web has gone from the simple exchange of static information to simple web services to today’s complex ecosystem and app economy. Each evolution posed different challenges, and new standards and protocols emerged to address them.

In the late ‘90s and early 2000s, web APIs comprised the exchange of documents formatted in Extensible Markup Language (XML) using Remote Procedure Calls

(RPC). XML, developed by the World Wide Web Consortium, is a standard structured way of encoding documents that is readable by humans and machines. RPCs are, as the name implies, when one computer program calls or executes a procedure in another location typically on another device where a procedure represents a package of code that performs a task.

XML and RPC led to the development of the Simple Object Access Protocol or SOAP. SOAP offered some additional capability to XML-RPC and supported what became known as web services, which hit the hype curve in the early 2000s. SOAP is primarily used in machine-to-machine connections as opposed to server to web browser.

The next significant evolution was the appearance of AJAX in 2005. AJAX stands for Asynchronous JavaScript And XML. AJAX focuses more on the server-to-client (web browser) relationship. Before AJAX, an individual would load a web page and submit some information that would cause another web page to load. AJAX allowed the dynamic interaction with parts of a web page without reloading the main page, making the experience much more active like an application. This evolution was known as Web 2.0.

To improve the performance of the interaction with the web server when using AJAX, developers began to switch from XML to JSON, JavaScript Object Notation, when using AJAX. The growth in JSON led to the development of JavaScript programming libraries like jQuery that made the use of AJAX and web APIs relatively easy, further propelling their growth.

While this evolution in standards was occurring, the architectural style of REST, Representational State Transfer, was also evolving. Originally, published by Roy

T. Fielding in his 2000 Doctoral Dissertation, REST described a different way of interaction that more fully takes advantage of HTTP/s methods. Methods define the nature of the interaction available. While SOAP calls over HTTP only include GET and POST, REST includes GET, HEAD, POST, PUT, PATCH, DELETE, CONNECT, OPTIONS, and TRACE, providing much more capability. In addition to HTTP/s, REST also provides for responses formatted in XML and JSON. APIs following the REST

architecture are known as RESTful APIs. This type of API is now prevalent and common both for web pages and mobile applications.

Web APIs continue to grow and evolve today, often driven by the needs of large web and social media companies. For example, in 2015, Facebook announced a new framework called GraphQL using a graph schema where both the data and relationships between the data are modeled. More recently, Google announced a new RPC style API called gRPC, which focuses on improved performance when used with large volumes of data.

Brief history of healthcare APIs

For much of history, interoperability in health care meant carrying, mailing, and eventually faxing paper records between organizations. Interestingly, these methods can still be found today. Although not as common as they used to be, these historical methods persist. Cases of records faxed to the wrong number and paper records not disposed of properly—being found in basements, garbage bins, and other sorted locations—still occur on a somewhat regular basis and provide evidence of this.

These methods are not the most efficient, and health care IT developers understand this. However, they also understand the power of controlling the flow of data. As the adoption of EHRs became more common, the next stage in the evolution of interoperability was proprietary APIs. Health IT developers began implementing proprietary APIs to which they could control access to the systems they sold and developed. Controlling access allowed them to make strategic business decisions on how and when to allow access to the APIs and underlying systems and information.

The industry was not ignorant of the limitations and costs of the proprietary-only approach to APIs. 2010 saw the passage of the Affordable Care Act (ACA). The ACA included funding for grants focused on providing better care at a reduced cost. One recipient of such an award was SMART Health IT. The project operates out

of the not-for-profit institutions, Boston Children’s Hospital Computational Health Informatics Program and the Harvard Medical School Department for Biomedical Informatics. With their $15M grant, SMART Health IT began the development of an open, standards-based technology platform that enables innovators to create apps that seamlessly and securely run across the healthcare system. They also supported the development of several applications targeted at healthcare professionals demonstrating the potential of standardized APIs.

Nevertheless, it wasn’t until the 2013 JASON study that the government began to address the problem. Significant health IT developers also started to see the writing on the wall at this time and collaborated to drive and direct future legislation. The Argonaut Project, discussed below, is a leading example of this collaboration.

HHS created the JASON Task Force in 2014 to respond to the findings of the JASON report. The JASON Task Force criticized the JASON study, claiming it did not capture all the progress made; nevertheless it agreed with the significant findings around lack of interoperability. These reports set the stage for the Cures Act and the Final Rules.

Also, in 2013, Health Level 7 International (HL7), the health care information data exchange standards organization, partnered with SMART Health IT on the FHIR (Fast Healthcare Interoperability Resources) development effort, which led to the 2014 release of the SMART on FHIR standards-based interoperable apps platform for electronic health records.

That same year the HL7 Argonaut Project was started in response to the JASON and JASON Task Force reports with sponsorship from a list of prominent health IT developers and providers.

“The purpose of the Argonaut Project is to rapidly develop a first-generation FHIR-based API and Core Data Services specification to enable expanded information sharing for electronic health records and other health information technology based on Internet standards and architectural patterns and styles.”

HL7 FHIR Argonaut Project, HL7.org, HL7 International

This effort proved the most successful by the developer community to get in front of the government response to JASON. The work of the Argonaut Project plays a large part in the determination of standards within the ONC Final Rule.

The new interoperability APIs

The ONC Final Rule establishes new criteria for a standardized API for Patient and Population Services. The Rule requires that organizations make available the US Core Data for Interoperability (USCDI), a defined data set using specific standards and implementation specifications for the APIs. At this time, the requirement is only “read” access; in other words, information need only be able to be retrieved, not altered or added. However, that is likely to change in the future. The standard and implementation specifications for the API include:

- Standard. HL7 Fast Healthcare Interoperability Resources (FHIR) Release 4.0.1

- Implementation Specification. HL7 FHIR US Core Implementation Guide STU 3.1.0

- Implementation Specification. HL7 SMART Application Launch Framework Implementation Guide Release 1.0.0, including mandatory support for the “SMART Core Capabilities”

- Implementation Specification. FHIR Bulk Data Access (Flat FHIR) (v 1.0.0: STU 1), including mandatory support for the “group-export” “OperationDefinition”

- Standard. OpenID Connect Core 1.0 incorporating errata set 1

The standard and specifications are described in more detail below.

HL7 is a nonprofit organization that first appeared in 1987. HL7 develops standards for the exchange, integration sharing, and retrieval of electronic health information in support of clinical practice. Given the critical role they have played defining and structuring data in health care IT, it is no surprise that HL7 plays a significant role in the required API standards and implementation specifications, as HL7’s standards are already in everyday use.

HL7’s Fast Healthcare Interoperability Resources (FHIR) Specification is “an interoperability standard intended to facilitate the exchange of healthcare information between healthcare providers, patients, caregivers, payers, researchers, and anyone else involved in the healthcare ecosystem. FHIR consists of two main parts—a content model in the form of ‘resources’ and a specification for the exchange of these resources in the form of real-time RESTful interfaces as well as messaging and Documents.” HL7 published the first draft of the FHIR Specification in 2014, and it is now on version 4.

The HL7 FHIR US Core Implementation Guide STU 3.1.0 defines the minimum conformance requirements for accessing patient data. The Guide defines two US Core Actors:

- US Core Requestor: An application that initiates a data access request to retrieve patient data. The Requestor can be thought of as the client in a client-server interaction.

- US Core Responder: A product that responds to the data access request providing patient data. The Responder can be thought of as the server in a client-server interaction.

The Guide uses conformance verbs—“shall,” “should,” and “may”—to describe the requirements. These conformance verbs are further defined as:

- Shall—an absolute requirement for all implementations

- Shall Not—an absolute prohibition against inclusion in all implementations

- Should/Should Not—A best practice or recommendation to be considered by implementers within the context of their particular implementation; there may be valid reasons to ignore an item, but the full implications must be understood and carefully weighed before choosing a different course

- May—This is truly optional language for an implementation; can be included or omitted as the implementor decides with no implications

The Guide provides references and linkages to the other implementation specifications such as SMART on FHIR, OAuth, and OpenID Connect as well as diagrams and example JSON snippets.

The HL7 SMART Application Launch Framework Implementation Guide Release 1.0.0 (the Framework) is the result of the ongoing collaboration between SMART Health IT and HL7. “The Framework supports apps for clinicians, patients, and others via a Personal Health Record (PHR) or Patient Portal or any FHIR system where

a user can give permissions to launch an app.” Authorization is accomplished by leveraging OAuth 2.0.

Authentication and authorization are two crucial, often confused security concepts. Authentication is verifying one’s identity. Authorization is confirming whether one should have access to the requested system or information. The ONC standard for authentication, OpenID Connect, is discussed below. The authorization standard, OAuth 2.0 is referenced within the HL7 SMART Application Launch Framework.

OAuth 2.0 is an open protocol that allows for secure authorization. What makes it unique is it specifies a process for resource owners to authorize third-party access to server resources without sharing their username and password with the third-party app.

There are several different use cases for deploying OAuth 2.0. For example, let’s assume a user (resource owner in OAuth 2.0) has selected a third-party health care application from the Apple store and downloaded it to her phone (the Client in OAuth 2.0). She opens the app and receives a message that to connect her application with her health insurance provider, she should select her insurer from the dropdown list. The user selects her insurance provider, and the application redirects her to an Authorization Server maintained by the health insurance provider. The Authorization Server asks for her username and password she uses for the provider’s customer portal. The insurance provider validates the username and password. If accepted, the user gets a message asking if she wants to allow the third-party application access (Consent in OAuth 2.0) to her health care information (Scope in OAuth 2.0). If the user grants consent, the Authorization Server sends the third-party application an authorization code. The app then sends the code back to the Authorization Server, and if the Authorization Servers determines its valid, it sends the app an access token in return. The third-party application then provides the access token through the API to a Resource Server maintained by the provider. The Resource Server validates the access token, and if it passes, the Resource Server uploads the requested health care information to the application.

OpenID Connect provides an identity layer that sits on top of the OAuth 2.0 protocol. It facilitates the secure authorization by first providing authentication. OpenID Connect allows third-party applications to verify the user’s identity based on the authentication performed by the Authorization Server, as well as obtain basic profile information about the user in an interoperable and REST-like manner.

The FHIR Bulk Data Access (Flat FHIR) (v 1.0.0: STU 1) specification, including mandatory support for the “group-export” Operation Definition, is a bit different in that it is a standard around the exchange of large volumes of data about groups of individuals. This specification targets payer-to-provider and provider-to-provider exchanges.

Privacy and security risks of APIs

The protection of electronic information requires both security and privacy controls. IT security controls can only lock down electronic information within the system itself, and even then, only so much so before it is unusable. Eventually, to be useful, someone has to see the information. Therefore, we need privacy controls to regulate the further use and disclosure of personal information if we want to protect it. This need for both privacy and security is why the HIPAA Privacy and Security Rules work together to protect electronic health information.

Commenters raised privacy and security concerns immediately upon HHS first publishing the proposed rules. More specifically, commenters recognized that HIPAA would likely not cover third-party application developers targeting the patient APIs. Without the protections of HIPAA, the commenters proposed that the information provided to the app developers would be vulnerable, from an IT security perspective and a privacy perspective. Of specific concern to commenters was the potential exploitation of the information by third-party application developers in ways that are counter to the app users’ privacy expectations.

HHS’ response to this concern, published with the Final Rules, is that individuals are entitled to their health information. The public is now much more familiar with technology and, in particular, applications provided for use on devices such as smartphones. The Federal Trade Commission (FTC) enforces violations of privacy policies, and there are state consumer protection regulations as well. Furthermore, organizations are welcomed and encouraged to offer education and awareness training to the public on the use of third-party applications and the risks to the security and privacy of their health information. However, organizations should not actively prevent (information blocking) an individual’s use of a third-party application except as provided in the regulations.

IT security risks are typically described as the likelihood that a particular threat exploits a specific vulnerability given the security controls in place and the impact on the organization if that were to happen. The risk created through the implementation of an API is highly dependent on the environment in which the API was developed, implemented, and is maintained. Nevertheless, there are common threats, vulnerabilities, and controls associated with APIs that are informative of the type of risks to health information posed by APIs.

For this discussion, it is essential to remember that the APIs in question provide access to repositories of personal health information. Therefore, when considering the impact of a breach, at a minimum, the breach is likely to reveal one individual’s health information and, at worst, the health information of all individuals maintained by the organization.

Typically, when the general public thinks of IT security threats, they think of hackers. This thought is not incorrect, as hackers are threat actors that an organization must consider when attempting to understand IT risk. They, however, are not the only threat actors. There are others, depending on the scenario, to consider, such as careless software developers and IT administrators, along with IT system users and potential vendors.

Organizations should evaluate all of these threat actors when looking at the risk posed by the new health care APIs. It is a virtual certainty that hackers will attempt to exploit the new APIs. Similarly, it is very likely that in developing and implementing the APIs, mistakes will be made either in the software itself or desiging architecture and configuring the infrastructure supporting the APIs. Many organizations will rely on third parties to develop, implement, and maintain APIs, introducing vendors and vendor risk into the mix. Finally, end-users’ actions may also pose a threat.

The specific vulnerabilities in an API that an organization should assess relate to how the particular API is developed, implemented, and maintained. There are, however, several high-level categories of vulnerabilities common in APIs. These are:

Configuration deficiencies—There are typically many components supporting an API, including different types of servers and network devices. Often there will be configuration errors in the components supporting the APIs that make the API and the data it serves vulnerable.

- Software deficiencies—While common standards and specifications help guide how an API should be developed and implemented, the development of an API is not trivial. Often mistakes are introduced into the code during development, resulting in bugs in the code itself. If not discovered and fixed before deployment, these bugs can make the API and the information vulnerable.

- Authentication Deficiencies—Weaknesses in the authentication process, such as transmitting passwords that are not encrypted and readable to anyone monitoring the network traffic, can introduce vulnerabilities to the API and the information.

- Insufficient Capacity—If the developer did not design the API to handle the volume of traffic it receives, the API may become unavailable to users. For example, if there is a distributed denial-of-service attack (DDOS) launched on the API, and the API is not architected to manage the attack, the API will be unavailable to users.

- Password Creation and Distribution Process Deficiencies—Similar to authentication deficiencies, weakness in the process through which passwords are created and distributed creates a vulnerability to the API. For example, if a user can call a help desk and reset a password with limited verification of identity, the API will be vulnerable.

The above is certainly not an exhaustive list of potential API vulnerabilities. However, it begins to illustrate some of the complexity associated with the development, implementation, and operation of health care APIs.

Generating the list of reasonably anticipated threats and vulnerabilities required by OCR’s Guidance on Risk Analysis Requirements under the HIPAA Security Rule can be a daunting task. It is not typically cost-effective for organizations to do this on their own. Instead, leading health care organizations rely on specialists that gather and maintain repositories of threats, vulnerabilities, and controls and provide software to facilitate risk analysis.

Controls, referred to as safeguards under the HIPAA Security Rule, are an essential part of managing IT risk. Security professionals often discuss controls within three categories:

- Administrative Controls—Focused on the human element of security, starting with policies and procedures and including activities like security training and risk analysis.

- Physical Controls—Tangible things that prevent or detect unauthorized access to an area or system or asset. These include locks on doors, fences, key cards, and security desks. Often other devices that limit harm to areas and devices are also included, such as fire protection sprinklers.

- Technical Controls—Hardware and software mechanisms intended to protect software systems and electronic information.

The specific controls an organization chooses to implement should be driven by the unique risks that an organization faces and its risk tolerance. That said, there are standard baseline controls, such as the CIS Top 20 and the NIST Low Impact Baseline, that are good starting points for most organizations.

Today, determining the likelihood of a threat acting on a vulnerability is more art than science. There is no universally available actuarial data that provides sufficient statistical information to make likelihood determinations with verifiable accuracy. Instead, existing methodologies, both qualitative and quantitative, rely on the opinions of experts.

Different threat and vulnerability pairs will have different potential impacts, depending on how they would potentially affect the organization. For example,

the impact of a DDOS attack that prevents access to an API is likely less than if a software bug or configuration error exposes large volumes of patient records to anyone on the Internet.

To understand the impact of a data breach in health care, an excellent place to start is the Ponemon Institute, which does regular research on the cost of breaches. In its most recent report, Ponemon found that a breach in the US health care industry is, relatively speaking, the most expensive breach in any industry in the world with an average cost of $429 per record exposed and $6.5 million total per breach.

Given the risk associated with health care APIs, it is highly recommended that an API undergo technical testing before going live and that it is monitored and tested regularly thereafter. Technical testing should include vulnerability and web application pen testing. This is not simply a best practice, it is also required under the HIPAA Security Rule’s requirements for technical evaluation.

The CMS Final Rule provides that an organization can deny access to a third-party app or developer when the organization determines, consistent with risk analysis under the HIPAA Security Rule, that an unacceptable level of risk exists. The determination must be based on objective, verifiable criteria and applied fairly and consistently across applications and developers. Organizations cannot arbitrarily single out third-party app developers or third-party applications and deny them access. Following a risk analysis, a fair determination must be made based on the level of risk identified.

Also, under the ONC Final Rule, there are exceptions to the information blocking regulations for privacy and security. The Privacy Exception allows for the denial of access to EHI if the access would be a violation of state or federal privacy law. The Security Exception allows for the denial of access to EHI when a consistently applied security practice provides that permitting the access presents a specific risk to the confidentiality, integrity or availability of EHI.

Summing up

The potential of standardized APIs to finally breakdown the stovepipes of electronic health information is clear. This potential is particularly apparent when joined with the new regulations on information blocking. These rules combine to force the holders of electronic health information to make it available and the builders of the health care technology to facilitate the exchange.

Also, quite clear are the risks and potential impacts of building access points into the heart of health care organizations’ most valuable and protected information stores. The health care industry already struggles to protect ePHI, and requiring providers to punch new electronic holes into their core systems further expands the threat surface. Providers must bear both the cost of the APIs as well as the risk.

The Final Rules intend to bring new players into the game in the form of third-party application providers. Many of these players have a history of exploiting user data and allowing significant data breaches themselves. Whether consumers are now wise enough to make informed decisions on using these third parties is not yet clear. It is also not clear if FTC and state enforcement action will be a sufficient deterrent to the third-party application providers to stop them from violating their agreements with their users.

How this will all ultimately play out is yet to be seen. What is likely is that there will be both benefits and failures along the way. The government hypothesizes that improved care and reduced health care costs can be achieved by digitizing health information and demanding interoperability. It appears we might soon have an answer as to whether this hypothesis is correct.

About Jon Moore

Jon Moore is an experienced professional with a background in privacy and security law, technology, and health care. As Chief Risk Officer and Senior Vice President of Consulting Services at Clearwater, Jon works with health care leaders to safeguard their patients’ health, health information, corporate capital and earnings through the creation and development of strong, proactive privacy and information security programs. Together with his colleagues at Clearwater, Jon provides the strategic advice, services, training and tools needed for a complete Cyber Risk Management and HIPAA Compliance solution.

During an eight-year tenure with PricewaterhouseCoopers (PwC), Jon served in multiple roles. He was a leader of the Federal Healthcare Practice, Federal Practice IT Operational Leader, and a member of the Federal Practice’s Operational Leadership Team. Among the major federal clients supported by Jon and his team were the National Institute of Standards and Technology (NIST), National Institutes of Health (NIH), Indian Health Service (IHS), Department of Health and Human Services (HHS), U.S. Nuclear Regulatory Commission (NRC), Environmental Protection Agency (EPA), and Administration for Children and Families (ACF).

Jon holds a BA in Economics from Haverford College, a JD degree from Penn State University’s Dickinson Law, and an MS in Electronic Commerce from Carnegie Mellon’s School of Computer Science and Tepper School of Business.

He can be reached at jon.moore@clearwatersecurity.com.